Wisconsin’s Epic entanglement with AI in healthcare

Our homegrown medical-records giant and healthcare systems are ushering in a new wave of technology at the expense of nurses and patients.

Far from the rolling hills of Epic Systems’ campus in Verona, Wisconsin, hundreds of registered nurses—including members of the country’s largest nurses union, National Nurses United—held a protest at Kaiser Permanente’s (KP) San Francisco Medical Center in April 2024. Despite ongoing concerns about understaffing and burnout, the nurses were focused on an entirely new threat looming over the healthcare industry—artificial intelligence (AI)—as the culprit of worsening working conditions and patient care.

The protest tapped into healthcare workers’ growing resentment toward AI technology in hospitals, technology that Epic, Wisconsin’s homegrown healthcare-tech giant, is pushing around the world with help from large local healthcare systems like UW Health. As AI penetrates the American healthcare system, healthcare workers fear that it will establish an unyielding hold over administrative processes, hospital workflow, and, ultimately, patient care. Already, some of these fears have come to fruition, and the result is a dangerous downward spiral toward a dysfunctional healthcare culture, upended clinician-patient relations, and profit at the cost of lives.

Patients share these concerns. A Pew Research survey from 2023 found that 60% of Americans would be uncomfortable with healthcare providers relying on AI “to do things like diagnose disease or recommend treatments,” highlighting the general public’s apprehension toward AI-integrated healthcare.

Critics of the current trend of aggressively marketed AI technology aren’t targeting already ubiquitous software tools, like basic algorithms, but are instead focusing on the intensified commercialization of generative artificial intelligence. In the context of healthcare, AI has been integrated into electronic health records (EHR) systems and is often trained on patient data. “In the U.S., somewhere between 81-94% of patients have at least one medical record stored” in Epic’s EHR systems, health-data startup Particle Health claimed in a federal antitrust lawsuit it filed against Epic on September 23.

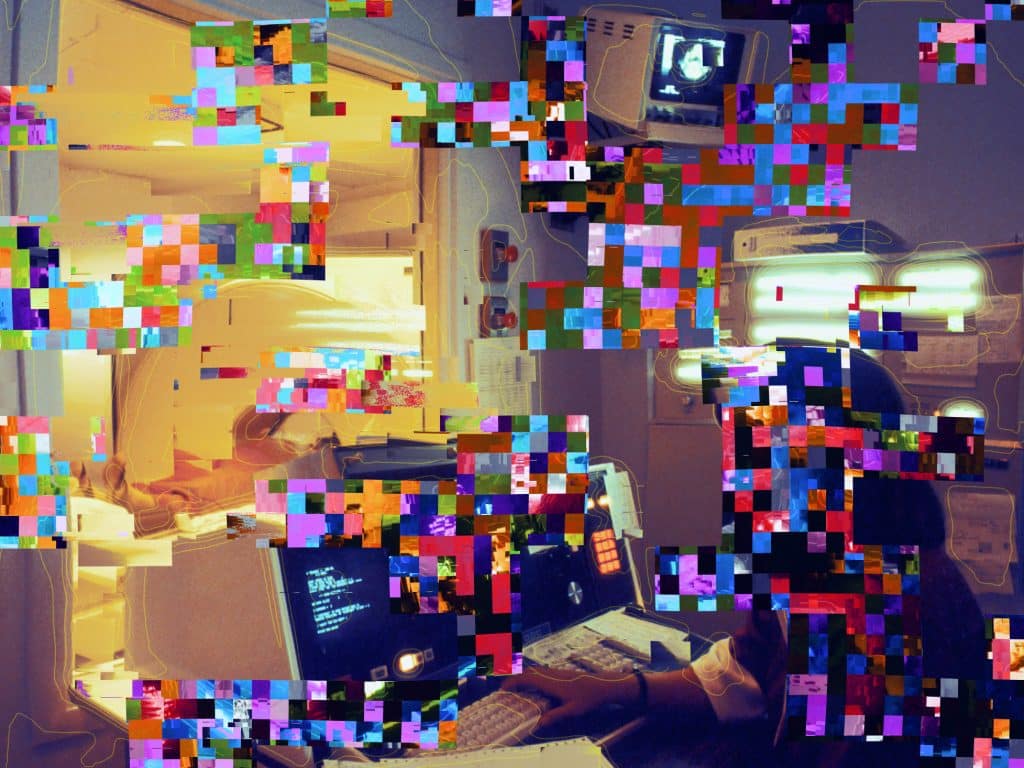

Epic has spearheaded the effort to incorporate AI into hospitals. The company began rolling out its AI-integrated technology in May 2023 after announcing a collaboration with Microsoft and has since claimed that it has over 60 “significant development projects” underway in AI. Epic has shared little detail about these projects, leaving unanswered crucial questions about how the company’s AI algorithmic technology functions, and how patient and hospital data will be collected and interpreted.

The opaque invasiveness of this technology, coupled with Epic’s monopolistic control over Americans’ health records, alarms nurses and patients and raises ethical questions about AI in hospitals. As AI-integrated technology began appearing in some hospitals nationwide, National Nurses United reported that nurses were experiencing tangible negative effects: unsafely low staffing levels, unpredictable schedules, inaccurate assessments of the severity of patients’ medical conditions, and inaccurately summarized patient notes.

Staffing can be controlled by AI algorithmic software that monitors the number of patients in a facility and the severity of their medical conditions. Depending on the data, it then predicts how many nurses and nursing assistants should be on staff attending to patients. Additionally, AI software can now summarize clinicians’ notes on patients, which are then sent electronically to other clinicians, replacing the traditional “hand-off” system built upon actual clinician-to-clinician interactions. In the context of untested and unregulated technology use and cost-cutting incentives, there is a lot of room for things to go wrong. And despite the blatant optimism of tech health developers and administrators, there have already been signs of detrimental, wide-ranging consequences.

An article in the June 2024 issue of National Nurses United’s magazine, National Nurse, reported that the most pressing problem is that AI-driven systems tend to misclassify the severity of a patient’s conditions, or acuity, in EHR systems. This error leads to critically low staffing levels, jeopardizing patient safety. AI within EHR systems also tracks nurses’ charting to determine staffing levels, but doesn’t consider nurses’ holistic expertise in patient acuity, or the time and care of double-checking by additional nurses.

Nurses at several Kaiser Permanente facilities in California told National Nurse that Epic’s AI did not properly account for the work hours it takes to administer treatments like continuous bladder irrigation or intravenous immunoglobulin. As reporter Lucia Hwang explains in the piece, these procedures “require nurses to be constantly monitoring and entering the patient’s room frequently.” Because Epic’s AI didn’t understand how time-consuming this work is, it underestimated the staffing levels these facilities needed.

“I don’t ever trust Epic to be correct,” Craig Cedotal, a pediatric oncology RN at Kaiser Permanente Oakland Medical Center, told National Nurse. “It’s never a reflection of what we need, but more a snapshot of what we’ve done.”

A page on Epic’s website touting its AI projects says that “AI technology is improving healthcare and has great potential to do much more.” The “much more,” as the page goes on to explain, includes industry-jargon-filled objectives: “Identify clinical risk adjustment opportunities,” “Automate your revenue cycle,” “streamline discharges,” and “forecast your capacity and staffing needs.” If these words seem vaguely all-encompassing, that’s because Epic’s AI technology is supposed to be just that—painting a clearer picture of the company’s ambitious trajectory toward domination.

Hospitals continue to implement AI healthcare management technology, and give it authority in clinical settings, at an alarming rate. In a nationwide winter 2024 survey of 2,300 RN members, National Nurses United found that about 40% reported that their employers had “introduced new devices, gadgets, and changes to the electronic health records (EHR) in the past year,” but “60 percent of respondents disagreed with the statement, ‘I trust my employer will implement A.I. with patient safety as the first priority.’” About half said their facilities use “algorithmic systems” to determine patient acuity, but about two-thirds of nurses said computer-generated acuity measurements did not match their real-world assessments. Almost a third said they could not override the system when they disagreed with decisions the AI made.

In response to Tone Madison’s questions about nurses’ criticisms of Epic’s AI systems, an Epic spokesperson said, “Nurse input is at the core of our development and implementation processes from the earliest stages,” and that they “encourage customers to have nurses guide their ongoing use of the software.” Epic gave an almost identical statement in June to Becker’s Hospital Review as a response to National Nurses United’s criticisms.

Mariah Clark, a nurse in the emergency department at UW Hospital’s main campus on Madison’s west side, says her hospital is ramping up to implement more AI technology, including a precision staffing model, AI-generated shift summary notes, and AI that listens in on patient interviews—all similar technology that nurses nationwide have been dealing with. She is also an active member of UW Health’s resurgent nurses union, part of the Service Employees International Union’s Wisconsin chapter.

“Technology is great, but I don’t have any great trust in corporations and for-profit stuff,” Clark says. “I’m worried about AI listening to whole interviews, because I don’t know if I want to tell my doctor the same stuff if someone like Elon Musk were getting a hold of it.”

Clark says that Epic’s E-chart system MyChart is efficient, but worries that it could take over tasks only a human healthcare worker could properly carry out.

“It cannot recreate what a nurse can see, what a nurse can feel with their hands,” Clark says.

Although healthcare workers must interact with Epic’s AI technology in their daily work, they are not the company’s customers; major hospitals and healthcare institutions are.

Nurses say their input continues to be ignored because Epic and the hospital industry are more driven to sell a product and increase profit than to improve patient care.

In 2023, Epic’s revenue increased to $4.9 billion, up $300 million from the previous year. The company has been rapidly diversifying its business, from working with medical device manufacturers and health insurers to marketing new tech featuring its software. For example, the company added a Fall Predictive Analytics Tool that aims to detect patients’ fall risk, and in-room hardware, including smart TVs and tablets equipped with MyChart.

The rise of AI in healthcare also accompanies any number of cost-saving measures that introduce more surveillance and new indignities for patients.

Clark recalls a recent conversation with a patient. “When you read about AI technology they talk about using sensors and cameras to help get that nursing picture,” she says. “But then you’re losing privacy. Think about all the intimate care we do for patients. We see and touch every part of your body with respect and privacy. We can’t control those cameras. The patient was discussing how when their bed was put on lock, a big camera had been wheeled into the room.”

Clark says the patient described this technology as a “video sitter.” The device, which resembles the antitheft robots that surveil department stores, can be wheeled around and usually sits in a corner. It sends video to a remote monitoring station where a person watches its feed, all while simultaneously monitoring several other patients. If needed, the operator can also talk to the patient through a speaker on the device.

“I can see this as an invasion of a person’s privacy,” Clark says. “They don’t know that there’s a person on the other end and it’s not someone they perceive as being a part of their care team. It’s not their nurse. It’s not their doctor. It’s no one they’ve met. It might not even be someone in the same building.”

Clark adds that the “video sitter” is sometimes used to justify eliminating bedside staff. Previously, hospital staff would employ methods like bedside alerts that reduce invasiveness.

“We would have used a person who would sit with them, and just be there to remind them to stay in bed instead of having this large, dark robot in the corner,” Clark says. “It’s a justification for both less human touch in an already terrifying situation and taking away staffing. It’s a justification for fewer nursing assistants and fewer patient safety techs.”

Clark says there may be a place for remote monitoring in the future of healthcare, but that its current iteration needs to change.

“A lot of places in healthcare and in general are leaping on this kind of technology a little too enthusiastically without considering the ramifications,” she says.

Beyond the hospital, the health insurance industry is similarly employing AI technology, working in tandem with hospital administration and tech healthcare companies to cut costs.

Christine Huberty, an attorney at the Center for Medicare Advocacy, a non-profit focusing on Medicare and Medicare law, has been advocating for AI regulation in the healthcare industry and representing individuals who have suffered from AI cost-cutting practices since 2019. She previously worked as a benefits specialist supervising attorney for the Madison-based Greater Wisconsin Agency for Aging Resources Inc. (GWAAR). While in her position at GWAAR, Huberty and her team began receiving a wave of appeals from individuals in nursing facilities. In each case, the patient had been to the hospital and required rehab before returning home. However, their privatized Medicare Advantage plans denied coverage while they were still recovering in facilities. The health insurance companies claimed the patient was ready to be released despite clinicians advising against it. Why were health insurance companies so confident in telling patients they no longer needed care when clearly this wasn’t the case?

The culprit, Huberty discovered, was nH Predict, a proprietary tool developed by naviHealth, a since-rebranded subsidiary of insurance giant UnitedHealthcare. The tool uses an AI algorithm to determine a patient’s discharge date (down to the decimal point) based on a data pool of other patients with similar conditions. Health insurance companies claim the tool only technically “advises” patients’ reviewing teams, but based on her experience representing patients, research, and discussions with health insurance employees, Huberty knows this isn’t true. She says the tool acts as a default when the final reviewer doesn’t have time to fully look at the claim and is incentivized to cut “unnecessary” costs. The patient’s doctor cannot override the tool’s inaccurate discharge date or fight back against the insurer’s denial. The patient is faced with the harrowing decision to either pay out of pocket for the rest of their treatment or go against the advice of their care team and risk stopping treatment altogether. Though Huberty first recognized this issue in Wisconsin, she says it’s occurring with health insurance companies across the country.

“You’re a cog in the wheel, you’re data. You’re not being treated as a person. You are a number, and you’re some medical records, maybe. So you have to do everything in your power to make sure that they know your personal story and that you are fighting to get the care that you need,” Huberty says.

According to Huberty, the nH Predict tool tends to discriminate against certain marginalized patients, for instance by denying coverage to patients who are low-income, over 80 years old, or have mental impairments. Huberty says that after denying these patients privatized coverage, the insurer will then try to steer them toward Medicaid, effectively dumping costs back onto the states. The burden of challenging the denial falls on the patient, who must fight while enduring the physical, mental, and emotional distress of ongoing treatment.

In addition to the underlying dynamics of for-profit players, AI as a technology poses its own ethical dilemmas.

Karola Kreitmair, a professor in the Department of Medical History and Bioethics at UW-Madison and a clinical ethics consultant at UW Hospital and American Family Children’s Hospital, says that algorithmic bias and opacity are major concerns facing the field.

“There’s a lot of data that gets fit in that might lead to patterns that can perpetuate discriminatory practices,” Kreitmair says. “What do we actually want from AI? And if we want AI that’s morally superior to how human society operates, then how do we engineer an AI that yields us this?”

Algorithmic opacity refers to the inherent difficulty of understanding how algorithms analyze data and make decisions. Algorithms, and the AIs that use them, don’t operate in a way that parallels familiar logical human thought processes, Kreitmair explains. This creates what Kreitmair calls a “black box” around exactly why an AI tool makes a given decision about, say, what kind of care a given patient needs. This, combined with algorithms’ potential to perpetuate bias, raises more fundamental questions about the purpose of AI in hospitals.

Gamifying health

One of Epic’s most touted AI innovations is a generative AI chatbot that imitates healthcare workers and drafts responses to patients. The company brands it as ART, or augmented response technology. This seemingly harmless, ChatGPT-esque chatting feature reveals the paradoxical relationship between healthcare workers and privatized tech management. If patient-clinician—and therefore, human—values are supposed to be at the heart of this technology, then why insert a robot mediator? Who would want to discuss sensitive medical information and seek counsel from an AI chatbot and not another qualified human being?

As it turns out, Epic’s marketing strategies do not pair well with the intuitive logic of healthcare.

In a November 2023 interview, Epic’s Executive Vice President of Research and Development, Sumit Rana, referred to an AI-generated response as a more “empathetic” alternative that helps clinicians overcome “writer’s block” when communicating with patients. Relaying an anecdote, Rana told Healthcare IT News: “There was one early example where there was a patient who had been on vacation on a different continent, I think in Europe, and had sent a message. And the AI not only drafted a response, but one of the things the response included was something along the lines of, and I’m paraphrasing, ‘I’m sorry you’re going through this, I hope your vacation has been good.’ And the physician said, ‘Boy, I don’t know if in my busy time I would have written that.’ But it does in fact enhance that human connection, and it’s a good thing to write.”

Epic’s founder and CEO, Judy Faulkner, echoed this idea in a speech during Epic’s annual Users Group Meeting conference in August 2024: “It saves clinicians about half a minute a message, and that can add up. And importantly, patients say they like it, and many prefer it. ART’s responses are often more empathetic than the very busy doctors. I think that’s kind of funny. The machine is more human than the human.”

Kreitmair says this type of language in healthcare tech marketing is problematic.

“It’s false that chatbots are empathetic,” Kreitmair says. “We crave that empathetic response, where the developers are now calling what they’re doing empathetic in order to fulfill that purpose. But clearly there is no way it can be empathetic because the actual phenomenon of empathy requires things like having feelings. AI doesn’t have any feelings.”

“People are going through a really hard time, especially when you have a complex medical situation,” Kreitmair adds. “It’s highly traumatizing, and so what you want is empathy, and then to say, ‘Here, you have this. Here’s this empathy.’ It just devalues the actual relationship that this is built on, which is a relationship between two humans, a patient and a clinician.”

Epic’s strange marketing assertions and the company’s voracious drive to break into new markets point to one of its core philosophies—gamification.

Gamification broadly refers to the application of game-design elements like point systems, badges, and leaderboards (all that’s fun, competitive, and addictive about games) to non-game activities and technology. It draws on theories of marketing and behavioral science that hold that reality, and in this case the reality of healthcare, is inherently dull or boring. People can be manipulated into experiencing a new reality that is colorful, simple, and, most importantly, skewed.

This also allows tech companies like Epic to harness productivity from workers and customers alike who are drawn to the technology’s exciting and interactive aesthetics. It also creates distance from the real world of healthcare—understaffed hospitals, mistreated patients, and the very real power structures that govern our well-being.

Once gamification is woven into this technology, a fundamental understanding of human impact is lost.

At Epic’s “Storytime”-themed Users Group Meeting conference in August 2024, Faulkner asked attendees if they had played wildly popular New York Times games like Wordle and Spelling Bee. “That’s what we’re trying to do for our software,” she said. “Figure out how to turn them into a game, to make them more fun, to learn. You ain’t seen nothing yet.”

One only has to visit Epic’s campus to see how much fantasy is embedded in its pursuits. Every day, on their way to work, Epic employees shuffle past off-brand Disney attractions, realms of grandeur such as “Wizard’s Academy,” “Deep Space,” and the gleaming “Emerald City.” But all it takes is pulling back the curtain to reveal the wizardry as mere corporate trickery.

How we got here

How does a healthcare industry arrive at replacing human expertise and human interactions as a “fix-all” solution to understaffing and burnout?

First, a healthcare system riddled with complex yet ineffective AI technology has roots in the creation of EHR technology itself. The push for a switch from paper to electronic medical records gained traction by the early 1990s, as computer hardware became more affordable and the Internet provided the possibility of easily accessible healthcare information. Faulkner, who has an academic background in computer science and notably not healthcare, had already been developing the technological infrastructure for EHRs since 1979, foreseeing a move to privatize national healthcare records. By the time the government provided hefty incentives for EHR installation with the HITECH Act after the 2008 financial crash, Epic was ripe to dominate the hyper-commercialized market.

It’s important not to overlook the seismic change tech healthcare companies like Epic and its competitor Cerner—which the gigantic tech company Oracle acquired for $28.4 billion in 2022 and has since renamed Oracle Health—brought on. Gaining ownership of an essential aspect of modern healthcare, Epic had achieved something inconceivable to market capitalists 50 years prior—the privatization of patient data, and the endless, future ways that data could be exploited for more profit. This context helps explain how and why a company originally only built to hold electronic medical records has branched out to manage clinicians’ tasks, offering products like AI listening technology that generates notes during patient appointments or inserts itself in a patient’s healing process through a smartphone app.

AI technology is the latest extension of the industry’s desire for infinite economic growth. But what is to be done when the only proposed option to improve the healthcare system is one that ultimately deteriorates it? With the incredible amount of power Epic wields, no one can stop the company from selling solutions to the problems it exacerbates. AI implementation then seems to be a mechanism to further capture, through management and surveillance, previously untapped aspects of care. Recent criticisms of the AI tech wave have sounded the alarm about the potential for AI that enables for-profit companies and corporations to increasingly exploit personal information and reinforce harm done to individuals with marginalized identities. To what extent is our healthcare system beholden to Epic’s technological marketing whims? The answer appears to be: to a great extent.

But how can we carve out a new path for technological innovation in healthcare? Maybe the answer doesn’t begin with technology but with how we care about care.

Putting care in healthcare

In the face of unchecked corporate power, researchers, advocates, and nurses alike are fighting to safeguard quality healthcare and to ensure the safe, regulated implementation of new technology.

“Let’s do the research,” Kreitmair says. “Does it lead to better results or not? We have very good strategies for doing scientifically rigorous research, and there’s no reason why we can’t do that with the implementation of AI in various areas. This research doesn’t really exist on a big scale yet. When it comes to healthcare and medicine, we’re talking about patients’ lives. We’re talking about their well-being.”

In July, Microsoft announced that it would partner with UW Health and UW-Madison’s School of Medicine and Public Health (along with Boston-area healthcare system Mass General Brigham) to begin integrating Microsoft’s Azure AI platform into medical imaging.

By partaking in the AI wave, universities and healthcare systems will have to grapple with risks of inaccuracy. One study published in Nature Medicine in May found that AI assistance unpredictably affected radiologists, aiding some while hindering others. UW-Madison researchers recently published a paper warning against an overreliance on machine-learning AI technology in genetics research. They found that machine-learning AI employed in genome-wide association studies to bridge data gaps can result in faulty predictions.

This collaboration also marks, for Microsoft, a new frontier for profit-making and a major entrance into the healthcare industry. The company’s server products and cloud services revenue increased 21% in the past fiscal year. Health systems spend an estimated $65 billion annually on imaging alone.

The only thing left to consider is a future where AI technology sustainably improves healthcare.

“We need to maintain the human at the center of medicine and healthcare and build AI that supports human-centered healthcare rather than undermines it. We have to be extremely thoughtful in bringing AI into the clinic and health records,” Kreitmair says.

On an individual level, continuous advocacy and demands for accountability from companies abusing AI have the potential to spark meaningful changes. After testifying before the Senate Health Committee in late 2023, Huberty noticed a decrease in the appeals GWAAR received. She attributes this to the intensified scrutiny of health insurance companies and Livanta, the quality improvement organization in Wisconsin responsible for reviewing appeals.

“Do I think that the insurance companies changed course? Not really. Did more awareness come about? Absolutely. There were a lot of different news stories and things locally to where people were empowered to say ‘no, we actually should appeal these. We do have rights. They are just blanket denials. They’re not looking at my individual case.’ We just know to appeal these now. I think that pushback has helped too, but there’s a lot more work to do,” Huberty says.

Clark believes that unionizing could help solve the problem of nursing burnout and would be much more cost-effective than investing in AI technology or hemorrhaging money to pay traveling nurses to staff hospitals. If the union had a contract that ensured nurses would be a part of AI decision-making, she and her coworkers would more openly embrace the new technology.

“We’ve seen UW bring in outside consultants before. I remember they once compared patient care to making sandwiches at a Subway, [saying] patient care and staffing should be more efficient and measured like that. Humans are not sandwiches. We are taking care of people, not problems,” Clark says.

“The corporate, profit-driven aspects of generative AI as well as the environmental impacts are absolutely horrifying to me,” Clark says. “AI as a tool is not. I think we can and should use appropriate technology to make our lives easier. That’s what AI should be doing, making things easier and being an adjunct to human knowledge and judgment, and not a replacement of it.”

Correction (Feb. 14, 2025): The initial version of this story stated that Particle’s antitrust lawsuit against Epic claims that Epic “controls between 81% and 94% of Americans’ health records.” The filing actually states that between 81% and 94% of patients in the U.S. have at least one record stored in Epic’s EHR systems.
